The Sansa Waitlist Is Now Open

We built Sansa because we lived the problem. At our previous company, our LLM API bills kept climbing month after month. We were paying premium prices for every request, even when simpler models would have sufficed. The cognitive overhead of constantly deciding which model to use for each scenario slowed us down, and we still weren't sure we were getting the best results for our money. When we finally built a system to intelligently route requests behind the scenes, we discovered we could cut costs by 60-90% while actually improving quality. That realization became Sansa.

Sansa is an AI API that delivers better AI at half the cost. Choosing among GPT-5, Claude Haiku, Gemini Flash, and dozens of other models for every request is tedious and error-prone, and the work never ends as your product evolves. Sansa makes that choice for you: it routes each request to the model best suited to what you're trying to accomplish. You keep the same simple API interface you're used to, and the model selection happens automatically. The result is dramatically better AI performance at a fraction of the cost, without the mental overhead or any compromise on quality.

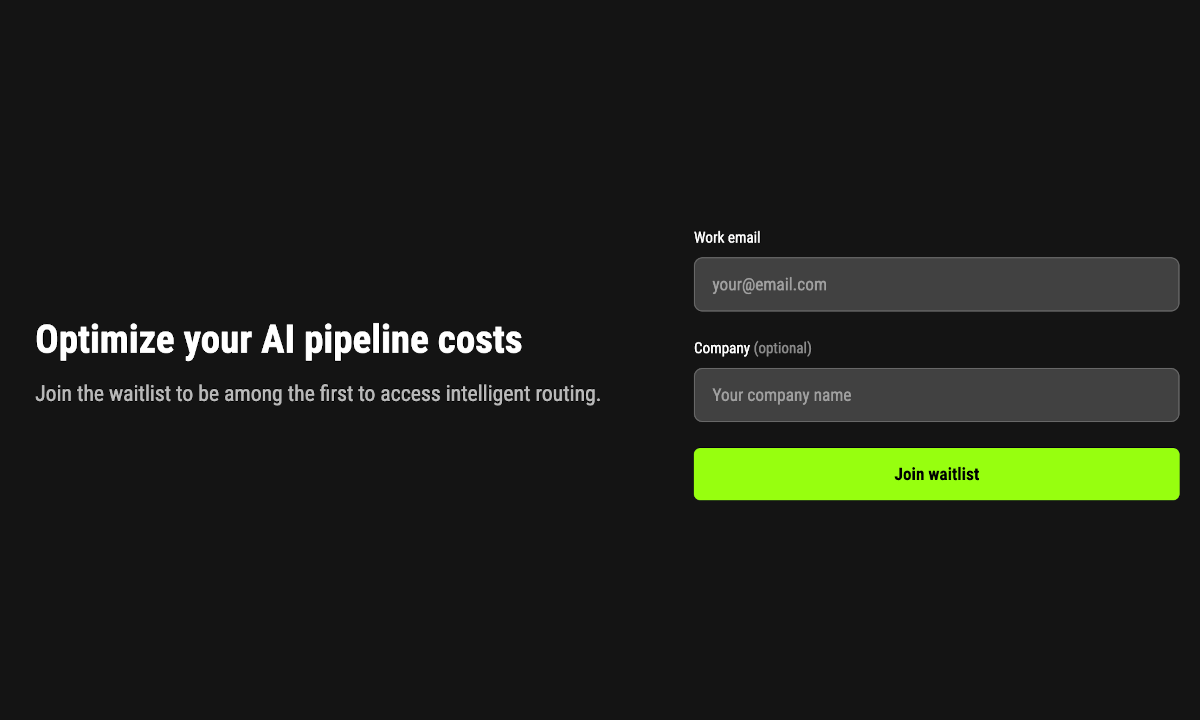

We are opening early access to companies that process significant token volumes. Early users will get priority onboarding and direct access to our founding team for implementation support. If you want better AI at half the cost, join the waitlist today.